mirror of

https://github.com/Steffo99/unimore-hpc-assignments.git

synced 2025-04-18 12:30:31 +00:00

HPC CUDA Lab Appendix

This commit is contained in:

parent

9570af5b66

commit

f859ef9daf

10 changed files with 818 additions and 0 deletions

README.md

cuda/appendix

|

|

@ -18,6 +18,7 @@ The exercises related to OpenMP programming model can be found in the folder `op

|

|||

- `cuda\lab1`: CUDA Basics

|

||||

- `cuda\lab2`: CUDA Memory Model

|

||||

- `cuda\lab3`: CUDA Advanced Host Management

|

||||

- `cuda\appendix`: CUDA Nsight Tutorial

|

||||

|

||||

### (Optional)

|

||||

- `challenge`: Parallelize the code with everything you learned and submit the result before *21 May 2021*

|

||||

|

|

|

|||

6

cuda/appendix/.gitignore

vendored

Normal file

6

cuda/appendix/.gitignore

vendored

Normal file

|

|

@ -0,0 +1,6 @@

|

|||

Release/

|

||||

Debug/

|

||||

|

||||

.ptp-sync

|

||||

.settings

|

||||

.ptp-sync-folder

|

||||

21

cuda/appendix/OpenCV-Config.txt

Normal file

21

cuda/appendix/OpenCV-Config.txt

Normal file

|

|

@ -0,0 +1,21 @@

|

|||

# Add Include Dir

|

||||

/usr/include/opencv4/opencv

|

||||

/usr/include/opencv4

|

||||

|

||||

# Add Libs

|

||||

opencv_dnn

|

||||

gomp

|

||||

opencv_gapi

|

||||

opencv_highgui

|

||||

opencv_ml

|

||||

opencv_objdetect

|

||||

opencv_photo

|

||||

opencv_stitching

|

||||

opencv_video

|

||||

opencv_calib3d

|

||||

opencv_features2d

|

||||

opencv_flann

|

||||

opencv_videoio

|

||||

opencv_imgcodecs

|

||||

opencv_imgproc

|

||||

opencv_core

|

||||

246

cuda/appendix/gemm/gemm.cu

Normal file

246

cuda/appendix/gemm/gemm.cu

Normal file

|

|

@ -0,0 +1,246 @@

|

|||

/*

|

||||

* BSD 2-Clause License

|

||||

*

|

||||

* Copyright (c) 2020, Alessandro Capotondi

|

||||

* All rights reserved.

|

||||

*

|

||||

* Redistribution and use in source and binary forms, with or without

|

||||

* modification, are permitted provided that the following conditions are met:

|

||||

*

|

||||

* * Redistributions of source code must retain the above copyright notice, this

|

||||

* list of conditions and the following disclaimer.

|

||||

*

|

||||

* * Redistributions in binary form must reproduce the above copyright notice,

|

||||

* this list of conditions and the following disclaimer in the documentation

|

||||

* and/or other materials provided with the distribution.

|

||||

*

|

||||

* THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

|

||||

* AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

|

||||

* IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

|

||||

* DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

|

||||

* FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

|

||||

* DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

|

||||

* SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

|

||||

* CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

|

||||

* OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

|

||||

* OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

||||

*/

|

||||

|

||||

/**

|

||||

* @file gemm.cu

|

||||

* @author Alessandro Capotondi

|

||||

* @date 12 May 2020

|

||||

* @brief GEMM Kernel

|

||||

*

|

||||

* @see https://dolly.fim.unimore.it/2019/course/view.php?id=152

|

||||

*/

|

||||

|

||||

#include <assert.h>

|

||||

#include <stdio.h>

|

||||

#include <stdlib.h>

|

||||

#include <time.h>

|

||||

|

||||

#define gpuErrchk(ans) \

|

||||

{ \

|

||||

gpuAssert((ans), __FILE__, __LINE__); \

|

||||

}

|

||||

static inline void gpuAssert(cudaError_t code, const char *file, int line, bool abort = true)

|

||||

{

|

||||

if (code != cudaSuccess)

|

||||

{

|

||||

fprintf(stderr, "GPUassert: %s %s %d\n", cudaGetErrorString(code), file, line);

|

||||

if (abort)

|

||||

exit(code);

|

||||

}

|

||||

}

|

||||

|

||||

#define TWO02 (1 << 2)

|

||||

#define TWO04 (1 << 4)

|

||||

#define TWO08 (1 << 8)

|

||||

|

||||

#ifndef N

|

||||

#define N (1 << 10)

|

||||

#endif

|

||||

#ifndef TILE_W

|

||||

#define TILE_W 128

|

||||

#endif

|

||||

#ifndef BLOCK_SIZE

|

||||

#define BLOCK_SIZE 32

|

||||

#endif

|

||||

|

||||

#define SM 64

|

||||

static void reorder(float *__restrict__ a, float *__restrict__ b, int n)

|

||||

{

|

||||

for (int i = 0; i < SM; i++)

|

||||

for (int j = 0; j < SM; j++)

|

||||

b[i * SM + j] = a[i * n + j];

|

||||

}

|

||||

|

||||

static void mm(float *__restrict__ a, float *__restrict__ b, float *__restrict__ c, int n)

|

||||

{

|

||||

for (int i = 0; i < SM; i++)

|

||||

{

|

||||

for (int k = 0; k < SM; k++)

|

||||

{

|

||||

for (int j = 0; j < SM; j++)

|

||||

{

|

||||

c[i * n + j] += a[i * n + k] * b[k * SM + j];

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

void gemm_host(float *a, float *b, float *c, int n)

|

||||

{

|

||||

int bk = n / SM;

|

||||

#pragma omp parallel for collapse(3)

|

||||

for (int i = 0; i < bk; i++)

|

||||

{

|

||||

for (int j = 0; j < bk; j++)

|

||||

{

|

||||

for (int k = 0; k < bk; k++)

|

||||

{

|

||||

float b2[SM * SM];

|

||||

reorder(&b[SM * (k * n + j)], b2, n);

|

||||

mm(&a[SM * (i * n + k)], b2, &c[SM * (i * n + j)], n);

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

__global__ void gemm(float *__restrict__ a, float *__restrict__ b, float *__restrict__ c, int n)

|

||||

{

|

||||

__shared__ float As[BLOCK_SIZE][BLOCK_SIZE];

|

||||

__shared__ float Bs[BLOCK_SIZE][BLOCK_SIZE];

|

||||

|

||||

int ib = blockIdx.y;

|

||||

int jb = blockIdx.x;

|

||||

|

||||

int it = threadIdx.y;

|

||||

int jt = threadIdx.x;

|

||||

|

||||

int a_offset, b_offset, c_offset;

|

||||

|

||||

float Cvalue = 0.0f;

|

||||

for (int kb = 0; kb < (n / BLOCK_SIZE); ++kb)

|

||||

{

|

||||

a_offset = ib * n * BLOCK_SIZE + kb * BLOCK_SIZE;

|

||||

b_offset = kb * n * BLOCK_SIZE + jb * BLOCK_SIZE;

|

||||

As[it][jt] = a[a_offset + it * n + jt];

|

||||

Bs[it][jt] = b[b_offset + it * n + jt];

|

||||

__syncthreads();

|

||||

|

||||

for (int k = 0; k < BLOCK_SIZE; ++k)

|

||||

Cvalue += As[it][k] * Bs[k][jt];

|

||||

|

||||

__syncthreads();

|

||||

}

|

||||

|

||||

c_offset = ib * n * BLOCK_SIZE + jb * BLOCK_SIZE;

|

||||

c[c_offset + it * n + jt] = Cvalue;

|

||||

}

|

||||

|

||||

int main(int argc, char *argv[])

|

||||

{

|

||||

int n = N, iret = 0;

|

||||

float *a, *b, *c, *g;

|

||||

struct timespec rt[2];

|

||||

double wt; // walltime

|

||||

|

||||

if (argc > 1)

|

||||

n = atoi(argv[1]);

|

||||

|

||||

//TODO Update malloc to cudaMallocHost or cudaMallocManaged (if necessary)

|

||||

if (NULL == (a = (float *)malloc(sizeof(*a) * n * n)))

|

||||

{

|

||||

printf("error: memory allocation for 'x'\n");

|

||||

iret = -1;

|

||||

}

|

||||

//TODO Update malloc to cudaMallocHost or cudaMallocManaged (if necessary)

|

||||

if (NULL == (b = (float *)malloc(sizeof(*b) * n * n)))

|

||||

{

|

||||

printf("error: memory allocation for 'y'\n");

|

||||

iret = -1;

|

||||

}

|

||||

//TODO Update malloc to cudaMallocHost or cudaMallocManaged (if necessary)

|

||||

if (NULL == (c = (float *)malloc(sizeof(*c) * n * n)))

|

||||

{

|

||||

printf("error: memory allocation for 'z'\n");

|

||||

iret = -1;

|

||||

}

|

||||

if (NULL == (g = (float *)malloc(sizeof(*g) * n * n)))

|

||||

{

|

||||

printf("error: memory allocation for 'z'\n");

|

||||

iret = -1;

|

||||

}

|

||||

|

||||

if (0 != iret)

|

||||

{

|

||||

//TODO Update cudaFreeHost or cudaFree (if necessary)

|

||||

free(a);

|

||||

//TODO Update cudaFreeHost or cudaFree (if necessary)

|

||||

free(b);

|

||||

//TODO Update cudaFreeHost or cudaFree (if necessary)

|

||||

free(c);

|

||||

free(g);

|

||||

exit(EXIT_FAILURE);

|

||||

}

|

||||

|

||||

//Init Data

|

||||

int _b = rand() % TWO04;

|

||||

int _c = rand() % TWO08;

|

||||

|

||||

#pragma omp parallel for

|

||||

for (int i = 0; i < n * n; i++)

|

||||

{

|

||||

a[i] = _b / (float)TWO02;

|

||||

b[i] = _c / (float)TWO04;

|

||||

c[i] = g[i] = 0.0;

|

||||

}

|

||||

|

||||

clock_gettime(CLOCK_REALTIME, rt + 0);

|

||||

gemm_host(a, b, g, n);

|

||||

clock_gettime(CLOCK_REALTIME, rt + 1);

|

||||

wt = (rt[1].tv_sec - rt[0].tv_sec) + 1.0e-9 * (rt[1].tv_nsec - rt[0].tv_nsec);

|

||||

printf("GEMM (Host) : %9.3f sec %9.1f GFLOPS\n", wt, 2.0 * n * n * n / (1.0e9 * wt));

|

||||

|

||||

//TODO Remove if unecessary

|

||||

float *d_a, *d_b, *d_c;

|

||||

gpuErrchk(cudaMalloc((void **)&d_a, sizeof(float) * n * n));

|

||||

gpuErrchk(cudaMalloc((void **)&d_b, sizeof(float) * n * n));

|

||||

gpuErrchk(cudaMalloc((void **)&d_c, sizeof(float) * n * n));

|

||||

|

||||

clock_gettime(CLOCK_REALTIME, rt + 0);

|

||||

//TODO Remove if unecessary

|

||||

gpuErrchk(cudaMemcpy(d_a, a, sizeof(float) * n * n, cudaMemcpyHostToDevice));

|

||||

gpuErrchk(cudaMemcpy(d_b, b, sizeof(float) * n * n, cudaMemcpyHostToDevice));

|

||||

dim3 dimBlock(BLOCK_SIZE, BLOCK_SIZE);

|

||||

dim3 dimGrid((n + (BLOCK_SIZE)-1) / (BLOCK_SIZE), (n + (BLOCK_SIZE)-1) / (BLOCK_SIZE));

|

||||

gemm<<<dimGrid, dimBlock>>>(d_a, d_b, d_c, n);

|

||||

gpuErrchk(cudaPeekAtLastError());

|

||||

//TODO Remove if unecessary

|

||||

gpuErrchk(cudaMemcpy(c, d_c, sizeof(float) * n * n, cudaMemcpyDeviceToHost));

|

||||

clock_gettime(CLOCK_REALTIME, rt + 1);

|

||||

wt = (rt[1].tv_sec - rt[0].tv_sec) + 1.0e-9 * (rt[1].tv_nsec - rt[0].tv_nsec);

|

||||

printf("GEMM-v1 (GPU): %9.3f sec %9.1f GFLOPS\n", wt, 2.0 * n * n * n / (1.0e9 * wt));

|

||||

|

||||

for (int i = 0; i < n * n; i++)

|

||||

{

|

||||

iret = *(int *)(g + i) ^ *(int *)(c + i);

|

||||

assert(iret == 0);

|

||||

}

|

||||

|

||||

//TODO Update cudaFreeHost or cudaFree (if necessary)

|

||||

free(a);

|

||||

//TODO Update cudaFreeHost or cudaFree (if necessary)

|

||||

free(b);

|

||||

//TODO Update cudaFreeHost or cudaFree (if necessary)

|

||||

free(c);

|

||||

free(g);

|

||||

//TODO Remove if unecessary

|

||||

gpuErrchk(cudaFree(d_a));

|

||||

//TODO Remove if unecessary

|

||||

gpuErrchk(cudaFree(d_b));

|

||||

//TODO Remove if unecessary

|

||||

gpuErrchk(cudaFree(d_c));

|

||||

|

||||

return 0;

|

||||

}

|

||||

183

cuda/appendix/saxpy/saxpy.cu

Normal file

183

cuda/appendix/saxpy/saxpy.cu

Normal file

|

|

@ -0,0 +1,183 @@

|

|||

/*

|

||||

* BSD 2-Clause License

|

||||

*

|

||||

* Copyright (c) 2020, Alessandro Capotondi

|

||||

* All rights reserved.

|

||||

*

|

||||

* Redistribution and use in source and binary forms, with or without

|

||||

* modification, are permitted provided that the following conditions are met:

|

||||

*

|

||||

* * Redistributions of source code must retain the above copyright notice, this

|

||||

* list of conditions and the following disclaimer.

|

||||

*

|

||||

* * Redistributions in binary form must reproduce the above copyright notice,

|

||||

* this list of conditions and the following disclaimer in the documentation

|

||||

* and/or other materials provided with the distribution.

|

||||

*

|

||||

* THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

|

||||

* AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

|

||||

* IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

|

||||

* DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

|

||||

* FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

|

||||

* DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

|

||||

* SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

|

||||

* CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

|

||||

* OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

|

||||

* OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

||||

*/

|

||||

|

||||

/**

|

||||

* @file saxpy.c

|

||||

* @author Alessandro Capotondi

|

||||

* @date 12 May 2020

|

||||

* @brief Saxpy

|

||||

*

|

||||

* @see https://dolly.fim.unimore.it/2019/course/view.php?id=152

|

||||

*/

|

||||

|

||||

#include <assert.h>

|

||||

#include <time.h>

|

||||

#include <stdlib.h>

|

||||

#include <stdio.h>

|

||||

#include <string.h>

|

||||

#include <math.h>

|

||||

#include <cuda_runtime.h>

|

||||

|

||||

#define gpuErrchk(ans) \

|

||||

{ \

|

||||

gpuAssert((ans), __FILE__, __LINE__); \

|

||||

}

|

||||

static inline void gpuAssert(cudaError_t code, const char *file, int line, bool abort = true)

|

||||

{

|

||||

if (code != cudaSuccess)

|

||||

{

|

||||

fprintf(stderr, "GPUassert: %s %s %d\n", cudaGetErrorString(code), file, line);

|

||||

if (abort)

|

||||

exit(code);

|

||||

}

|

||||

}

|

||||

|

||||

#define TWO02 (1 << 2)

|

||||

#define TWO04 (1 << 4)

|

||||

#define TWO08 (1 << 8)

|

||||

#ifndef N

|

||||

#define N (1 << 27)

|

||||

#endif

|

||||

|

||||

#ifndef BLOCK_SIZE

|

||||

#define BLOCK_SIZE (512)

|

||||

#endif

|

||||

|

||||

/*

|

||||

*SAXPY (host implementation)

|

||||

* y := a * x + y

|

||||

*/

|

||||

void host_saxpy(float * __restrict__ y, float a, float * __restrict__ x, int n)

|

||||

{

|

||||

#pragma omp parallel for simd schedule(simd: static)

|

||||

for (int i = 0; i < n; i++)

|

||||

{

|

||||

y[i] = a * x[i] + y[i];

|

||||

}

|

||||

}

|

||||

|

||||

__global__ void gpu_saxpy(float * __restrict__ y, float a, float * __restrict__ x, int n)

|

||||

{

|

||||

int i = blockIdx.x * blockDim.x + threadIdx.x;

|

||||

if (i < n)

|

||||

y[i] = a * x[i] + y[i];

|

||||

}

|

||||

|

||||

int main(int argc, const char **argv)

|

||||

{

|

||||

int iret = 0;

|

||||

int n = N;

|

||||

float *h_x, *d_x;

|

||||

float *h_y, *d_y;

|

||||

float *h_z;

|

||||

float a = 101.0f / TWO02,

|

||||

b, c;

|

||||

struct timespec rt[2];

|

||||

double wt; // walltime

|

||||

|

||||

if (argc > 1)

|

||||

n = atoi(argv[1]);

|

||||

|

||||

//TODO Update malloc to cudaMallocHost or cudaMallocManaged (if necessary)

|

||||

if (NULL == (h_x = (float *)malloc(sizeof(float) * n)))

|

||||

{

|

||||

printf("error: memory allocation for 'x'\n");

|

||||

iret = -1;

|

||||

}

|

||||

//TODO Update malloc to cudaMallocHost or cudaMallocManaged (if necessary)

|

||||

if (NULL == (h_y = (float *)malloc(sizeof(float) * n)))

|

||||

{

|

||||

printf("error: memory allocation for 'y'\n");

|

||||

iret = -1;

|

||||

}

|

||||

if (NULL == (h_z = (float *)malloc(sizeof(float) * n)))

|

||||

{

|

||||

printf("error: memory allocation for 'z'\n");

|

||||

iret = -1;

|

||||

}

|

||||

if (0 != iret)

|

||||

{

|

||||

//TODO Update cudaFreeHost or cudaFree (if necessary)

|

||||

free(h_x);

|

||||

//TODO Update cudaFreeHost or cudaFree (if necessary)

|

||||

free(h_y);

|

||||

free(h_z);

|

||||

exit(EXIT_FAILURE);

|

||||

}

|

||||

|

||||

//Init Data

|

||||

b = rand() % TWO04;

|

||||

c = rand() % TWO08;

|

||||

for (int i = 0; i < n; i++)

|

||||

{

|

||||

h_x[i] = b / (float)TWO02;

|

||||

h_y[i] = h_z[i] = c / (float)TWO04;

|

||||

}

|

||||

|

||||

//TODO Remove if unecessary

|

||||

gpuErrchk(cudaMalloc((void **)&d_x, sizeof(float) * n));

|

||||

gpuErrchk(cudaMalloc((void **)&d_y, sizeof(float) * n));

|

||||

|

||||

clock_gettime(CLOCK_REALTIME, rt + 0);

|

||||

//TODO Remove if unecessary

|

||||

gpuErrchk(cudaMemcpy(d_x, h_x, sizeof(float) * n, cudaMemcpyHostToDevice));

|

||||

gpuErrchk(cudaMemcpy(d_y, h_y, sizeof(float) * n, cudaMemcpyHostToDevice));

|

||||

gpu_saxpy<<<((n + BLOCK_SIZE - 1) / BLOCK_SIZE), BLOCK_SIZE>>>(d_y, a, d_x, n);

|

||||

gpuErrchk(cudaPeekAtLastError());

|

||||

//TODO Remove if unecessary

|

||||

gpuErrchk(cudaMemcpy(h_y, d_y, sizeof(float) * n, cudaMemcpyDeviceToHost));

|

||||

clock_gettime(CLOCK_REALTIME, rt + 1);

|

||||

wt = (rt[1].tv_sec - rt[0].tv_sec) + 1.0e-9 * (rt[1].tv_nsec - rt[0].tv_nsec);

|

||||

printf("saxpy (GPU): %9.3f sec %9.1f GFLOPS\n", wt, 2 * n / wt);

|

||||

|

||||

//Check Matematical Consistency

|

||||

clock_gettime(CLOCK_REALTIME, rt + 0);

|

||||

host_saxpy(h_z, a, h_x, n);

|

||||

clock_gettime(CLOCK_REALTIME, rt + 1);

|

||||

wt = (rt[1].tv_sec - rt[0].tv_sec) + 1.0e-9 * (rt[1].tv_nsec - rt[0].tv_nsec);

|

||||

printf("saxpy (Host): %9.3f sec %9.1f GFLOPS\n", wt, 2 * n / wt);

|

||||

for (int i = 0; i < n; ++i)

|

||||

{

|

||||

iret = *(int *)(h_y + i) ^ *(int *)(h_z + i);

|

||||

assert(iret == 0);

|

||||

}

|

||||

|

||||

//TODO Update cudaFreeHost or cudaFree (if necessary)

|

||||

free(h_x);

|

||||

//TODO Remove if unecessary

|

||||

gpuErrchk(cudaFree(d_x));

|

||||

//TODO Update cudaFreeHost or cudaFree (if necessary)

|

||||

free(h_y);

|

||||

//TODO Remove if unecessary

|

||||

gpuErrchk(cudaFree(d_y));

|

||||

free(h_z);

|

||||

|

||||

// CUDA exit -- needed to flush printf write buffer

|

||||

cudaDeviceReset();

|

||||

return 0;

|

||||

}

|

||||

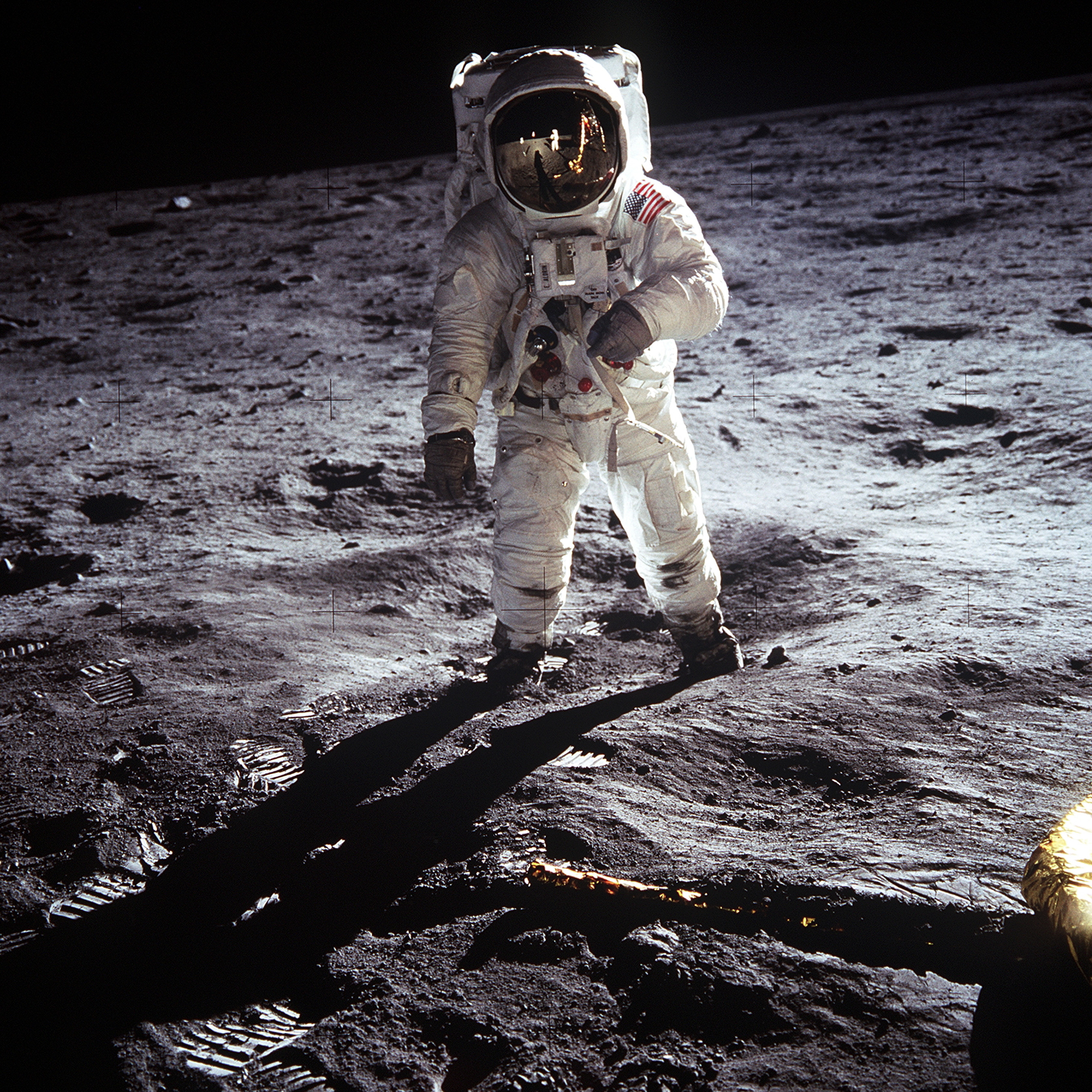

BIN

cuda/appendix/sobel/data/buzz.jpg

Normal file

BIN

cuda/appendix/sobel/data/buzz.jpg

Normal file

Binary file not shown.

|

After

(image error) Size: 2.4 MiB |

BIN

cuda/appendix/sobel/data/daisy.jpg

Normal file

BIN

cuda/appendix/sobel/data/daisy.jpg

Normal file

Binary file not shown.

|

After

(image error) Size: 66 KiB |

BIN

cuda/appendix/sobel/data/earth_rise.jpg

Normal file

BIN

cuda/appendix/sobel/data/earth_rise.jpg

Normal file

Binary file not shown.

|

After

(image error) Size: 2.8 MiB |

BIN

cuda/appendix/sobel/data/fiore.jpg

Normal file

BIN

cuda/appendix/sobel/data/fiore.jpg

Normal file

Binary file not shown.

|

After

(image error) Size: 261 KiB |

361

cuda/appendix/sobel/sobel.cu

Normal file

361

cuda/appendix/sobel/sobel.cu

Normal file

|

|

@ -0,0 +1,361 @@

|

|||

/*

|

||||

* BSD 2-Clause License

|

||||

*

|

||||

* Copyright (c) 2020, Alessandro Capotondi

|

||||

* All rights reserved.

|

||||

*

|

||||

* Redistribution and use in source and binary forms, with or without

|

||||

* modification, are permitted provided that the following conditions are met:

|

||||

*

|

||||

* * Redistributions of source code must retain the above copyright notice, this

|

||||

* list of conditions and the following disclaimer.

|

||||

*

|

||||

* * Redistributions in binary form must reproduce the above copyright notice,

|

||||

* this list of conditions and the following disclaimer in the documentation

|

||||

* and/or other materials provided with the distribution.

|

||||

*

|

||||

* THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

|

||||

* AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

|

||||

* IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

|

||||

* DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

|

||||

* FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

|

||||

* DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

|

||||

* SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

|

||||

* CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

|

||||

* OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

|

||||

* OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

||||

*/

|

||||

|

||||

/**

|

||||

* @file sobel.cu

|

||||

* @author Alessandro Capotondi

|

||||

* @date 5 May 2020

|

||||

* @brief Stencil 2d - Sobel

|

||||

*

|

||||

* @see https://dolly.fim.unimore.it/2019/course/view.php?id=152

|

||||

*/

|

||||

|

||||

#include <stdio.h>

|

||||

#include <time.h>

|

||||

#include <sys/time.h>

|

||||

#include <opencv2/opencv.hpp>

|

||||

#include <opencv2/imgcodecs/imgcodecs.hpp>

|

||||

#include <opencv2/objdetect/objdetect.hpp>

|

||||

#include <opencv2/highgui/highgui.hpp>

|

||||

|

||||

using namespace cv;

|

||||

using namespace std;

|

||||

|

||||

#ifndef BLOCK_SIZE

|

||||

#define BLOCK_SIZE 32

|

||||

#endif

|

||||

|

||||

#define gpuErrchk(ans) \

|

||||

{ \

|

||||

gpuAssert((ans), __FILE__, __LINE__); \

|

||||

}

|

||||

static inline void gpuAssert(cudaError_t code, const char *file, int line, bool abort = true)

|

||||

{

|

||||

if (code != cudaSuccess)

|

||||

{

|

||||

fprintf(stderr, "GPUassert: %s %s %d\n", cudaGetErrorString(code), file, line);

|

||||

if (abort)

|

||||

exit(code);

|

||||

}

|

||||

}

|

||||

|

||||

void sobel_host(unsigned char *__restrict__ orig, unsigned char *__restrict__ out, int width, int height)

|

||||

{

|

||||

#pragma omp parallel for simd collapse(2)

|

||||

for (int y = 1; y < height - 1; y++)

|

||||

{

|

||||

for (int x = 1; x < width - 1; x++)

|

||||

{

|

||||

int dx = (-1 * orig[(y - 1) * width + (x - 1)]) + (-2 * orig[y * width + (x - 1)]) + (-1 * orig[(y + 1) * width + (x - 1)]) +

|

||||

(orig[(y - 1) * width + (x + 1)]) + (2 * orig[y * width + (x + 1)]) + (orig[(y + 1) * width + (x + 1)]);

|

||||

int dy = (orig[(y - 1) * width + (x - 1)]) + (2 * orig[(y - 1) * width + x]) + (orig[(y - 1) * width + (x + 1)]) +

|

||||

(-1 * orig[(y + 1) * width + (x - 1)]) + (-2 * orig[(y + 1) * width + x]) + (-1 * orig[(y + 1) * width + (x + 1)]);

|

||||

out[y * width + x] = sqrt((float)((dx * dx) + (dy * dy)));

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

__global__ void sobel_v1(unsigned char *__restrict__ orig, unsigned char *__restrict__ out, int width, int height)

|

||||

{

|

||||

int i = threadIdx.y + blockIdx.y * blockDim.y;

|

||||

int j = threadIdx.x + blockIdx.x * blockDim.x;

|

||||

|

||||

if (j > 0 && i > 0 && j < width - 1 && i < height - 1)

|

||||

{

|

||||

int dx = (-1 * orig[(i - 1) * width + (j - 1)]) + (-2 * orig[i * width + (j - 1)]) + (-1 * orig[(i + 1) * width + (j - 1)]) +

|

||||

(orig[(i - 1) * width + (j + 1)]) + (2 * orig[i * width + (j + 1)]) + (orig[(i + 1) * width + (j + 1)]);

|

||||

int dy = (orig[(i - 1) * width + (j - 1)]) + (2 * orig[(i - 1) * width + j]) + (orig[(i - 1) * width + (j + 1)]) +

|

||||

(-1 * orig[(i + 1) * width + (j - 1)]) + (-2 * orig[(i + 1) * width + j]) + (-1 * orig[(i + 1) * width + (j + 1)]);

|

||||

out[i * width + j] = sqrt((float)((dx * dx) + (dy * dy)));

|

||||

}

|

||||

}

|

||||

|

||||

__global__ void sobel_v2(unsigned char *__restrict__ orig, unsigned char *__restrict__ out, int width, int height)

|

||||

{

|

||||

//TODO Declare i and j: global output indexes

|

||||

int i = threadIdx.y + blockIdx.y * blockDim.y;

|

||||

int j = threadIdx.x + blockIdx.x * blockDim.x;

|

||||

|

||||

//TODO Declare it and jt: Thread row and column of output matrix

|

||||

int it = threadIdx.y;

|

||||

int jt = threadIdx.x;

|

||||

|

||||

//TODO Declare shared input patch

|

||||

__shared__ unsigned char s_in[BLOCK_SIZE][BLOCK_SIZE];

|

||||

|

||||

//TODO Load input patch

|

||||

// Each thread loads one element of the patch

|

||||

s_in[it][jt] = orig[i * width + j];

|

||||

|

||||

//TODO Synchronize to make sure the sub-matrices are loaded

|

||||

// before starting the computation

|

||||

__syncthreads();

|

||||

|

||||

//TODO if block boundary do

|

||||

if (jt > 0 && it > 0 && jt < BLOCK_SIZE - 1 && it < BLOCK_SIZE - 1 && j > 0 && i > 0 && j < width - 1 && i < height - 1)

|

||||

{

|

||||

int dx = (-1 * s_in[it - 1][jt - 1]) + (-2 * s_in[it][jt - 1]) + (-1 * s_in[it + 1][jt - 1]) +

|

||||

(s_in[it - 1][jt + 1]) + (2 * s_in[it][jt + 1]) + (s_in[it + 1][jt + 1]);

|

||||

int dy = (s_in[it - 1][jt - 1]) + (2 * s_in[it - 1][jt]) + (s_in[it - 1][jt + 1]) +

|

||||

(-1 * s_in[it + 1][jt - 1]) + (-2 * s_in[it + 1][jt]) + (-1 * s_in[it + 1][jt + 1]);

|

||||

out[i * width + j] = sqrt((float)((dx * dx) + (dy * dy)));

|

||||

}

|

||||

else if (j > 0 && i > 0 && j < width - 1 && i < height - 1)

|

||||

{

|

||||

//TODO if not-block boundary do (tip check global boundaries)

|

||||

int dx = (-1 * orig[(i - 1) * width + (j - 1)]) + (-2 * orig[i * width + (j - 1)]) + (-1 * orig[(i + 1) * width + (j - 1)]) +

|

||||

(orig[(i - 1) * width + (j + 1)]) + (2 * orig[i * width + (j + 1)]) + (orig[(i + 1) * width + (j + 1)]);

|

||||

int dy = (orig[(i - 1) * width + (j - 1)]) + (2 * orig[(i - 1) * width + j]) + (orig[(i - 1) * width + (j + 1)]) +

|

||||

(-1 * orig[(i + 1) * width + (j - 1)]) + (-2 * orig[(i + 1) * width + j]) + (-1 * orig[(i + 1) * width + (j + 1)]);

|

||||

out[i * width + j] = sqrt((float)((dx * dx) + (dy * dy)));

|

||||

}

|

||||

}

|

||||

|

||||

__global__ void sobel_v3(unsigned char *__restrict__ orig, unsigned char *__restrict__ out, int width, int height)

|

||||

{

|

||||

//TODO Declare i and j: global output indexes (tip: use BLOCK_SIZE-2)

|

||||

int i = threadIdx.y + blockIdx.y * (BLOCK_SIZE - 2);

|

||||

int j = threadIdx.x + blockIdx.x * (BLOCK_SIZE - 2);

|

||||

|

||||

//TODO Declare it and jt: Thread row and column of output matrix

|

||||

int it = threadIdx.y;

|

||||

int jt = threadIdx.x;

|

||||

|

||||

//TODO Check if i and j are out of memory

|

||||

if (i >= width && j >= height)

|

||||

return;

|

||||

|

||||

//TODO Declare shared input patch

|

||||

__shared__ unsigned char s_in[BLOCK_SIZE][BLOCK_SIZE];

|

||||

|

||||

//TODO Load input patch

|

||||

// Each thread loads one element of the patch

|

||||

s_in[it][jt] = orig[i * width + j];

|

||||

|

||||

//TODO Synchronize to make sure the sub-matrices are loaded

|

||||

// before starting the computation

|

||||

__syncthreads();

|

||||

|

||||

//TODO Update block and bound checks

|

||||

if (jt > 0 && it > 0 && jt < BLOCK_SIZE - 1 && it < BLOCK_SIZE - 1 && j > 0 && i > 0 && j < width - 1 && i < height - 1)

|

||||

{

|

||||

int dx = (-1 * s_in[it - 1][jt - 1]) + (-2 * s_in[it][jt - 1]) + (-1 * s_in[it + 1][jt - 1]) +

|

||||

(s_in[it - 1][jt + 1]) + (2 * s_in[it][jt + 1]) + (s_in[it + 1][jt + 1]);

|

||||

int dy = (s_in[it - 1][jt - 1]) + (2 * s_in[it - 1][jt]) + (s_in[it - 1][jt + 1]) +

|

||||

(-1 * s_in[it + 1][jt - 1]) + (-2 * s_in[it + 1][jt]) + (-1 * s_in[it + 1][jt + 1]);

|

||||

out[i * width + j] = sqrt((float)((dx * dx) + (dy * dy)));

|

||||

}

|

||||

}

|

||||

|

||||

__global__ void sobel_v4(unsigned char *__restrict__ orig, unsigned char *__restrict__ out, int width, int height)

|

||||

{

|

||||

//TODO Declare i and j: global output indexes (tip: use BLOCK_SIZE)

|

||||

int i = threadIdx.y + blockIdx.y * blockDim.y;

|

||||

int j = threadIdx.x + blockIdx.x * blockDim.x;

|

||||

|

||||

//TODO Declare it and jt: Thread row and column of output matrix

|

||||

int it = threadIdx.y;

|

||||

int jt = threadIdx.x;

|

||||

|

||||

//TODO Declare shared input patch (tip: use BLOCK_SIZE+2)

|

||||

__shared__ unsigned char s_in[BLOCK_SIZE + 32][BLOCK_SIZE + 32];

|

||||

|

||||

//TODO Load input patch

|

||||

// Each thread loads one element of the patch

|

||||

s_in[it][jt] = orig[i * width + j];

|

||||

|

||||

//TODO Check condition and load remaining elements

|

||||

if ((it + BLOCK_SIZE) < BLOCK_SIZE + 2 && (jt) < BLOCK_SIZE + 2 && (i + BLOCK_SIZE) < width && (j) < height)

|

||||

s_in[it + BLOCK_SIZE][jt] = orig[(i + BLOCK_SIZE) * width + j];

|

||||

|

||||

if ((it) < BLOCK_SIZE + 2 && (jt + BLOCK_SIZE) < BLOCK_SIZE + 2 && (i) < width && (j + BLOCK_SIZE) < height)

|

||||

s_in[it][jt + BLOCK_SIZE] = orig[i * width + j + BLOCK_SIZE];

|

||||

|

||||

if ((it + BLOCK_SIZE) < BLOCK_SIZE + 2 && (jt + BLOCK_SIZE) < BLOCK_SIZE + 2 && (i + BLOCK_SIZE) < width && (j + BLOCK_SIZE) < height)

|

||||

s_in[it + BLOCK_SIZE][jt + BLOCK_SIZE] = orig[(i + BLOCK_SIZE) * width + j + BLOCK_SIZE];

|

||||

|

||||

//TODO Synchronize to make sure the sub-matrices are loaded

|

||||

// before starting the computation

|

||||

__syncthreads();

|

||||

|

||||

//TODO Update all idx adding y +1 and x +1

|

||||

if (jt < BLOCK_SIZE && it < BLOCK_SIZE && j < (width - 2) && i < (height - 2))

|

||||

{

|

||||

int dx = (-1 * s_in[it - 1 + 1][jt - 1 + 1]) + (-2 * s_in[it + 1][jt - 1 + 1]) + (-1 * s_in[it + 1 + 1][jt - 1 + 1]) +

|

||||

(s_in[it - 1 + 1][jt + 1 + 1]) + (2 * s_in[it + 1][jt + 1 + 1]) + (s_in[it + 1 + 1][jt + 1 + 1]);

|

||||

int dy = (s_in[it - 1 + 1][jt - 1 + 1]) + (2 * s_in[it - 1 + 1][jt + 1]) + (s_in[it - 1 + 1][jt + 1 + 1]) +

|

||||

(-1 * s_in[it + 1 + 1][jt - 1 + 1]) + (-2 * s_in[it + 1 + 1][jt + 1]) + (-1 * s_in[it + 1 + 1][jt + 1 + 1]);

|

||||

out[(i + 1) * width + j + 1] = sqrt((float)((dx * dx) + (dy * dy)));

|

||||

}

|

||||

}

|

||||

|

||||

int main(int argc, char *argv[])

|

||||

{

|

||||

int iret = 0;

|

||||

struct timespec rt[2];

|

||||

double wt; // walltime

|

||||

string filename("../data/buzz.jpg");

|

||||

|

||||

if (argc > 1)

|

||||

filename = argv[1];

|

||||

|

||||

// Load Image

|

||||

Mat image = imread(filename, IMREAD_GRAYSCALE);

|

||||

if (!image.data)

|

||||

{

|

||||

cout << "Could not open or find the image" << std::endl;

|

||||

return -1;

|

||||

}

|

||||

int width = image.size().width;

|

||||

int height = image.size().height;

|

||||

|

||||

// Create Output Images

|

||||

Mat out1 = image.clone();

|

||||

Mat out2 = image.clone();

|

||||

Mat result = image.clone();

|

||||

memset(out1.ptr(), 0, sizeof(unsigned char) * width * height);

|

||||

memset(out2.ptr(), 0, sizeof(unsigned char) * width * height);

|

||||

memset(result.ptr(), 0, sizeof(unsigned char) * width * height);

|

||||

|

||||

// Compute CPU Version - Golden Model

|

||||

clock_gettime(CLOCK_REALTIME, rt + 0);

|

||||

sobel_host(image.ptr(), out1.ptr(), width, height);

|

||||

clock_gettime(CLOCK_REALTIME, rt + 1);

|

||||

wt = (rt[1].tv_sec - rt[0].tv_sec) + 1.0e-9 * (rt[1].tv_nsec - rt[0].tv_nsec);

|

||||

printf("Sobel (Host) : %9.6f sec\n", wt);

|

||||

|

||||

//CUDA Buffer Allocation

|

||||

unsigned char *d_image_in;

|

||||

unsigned char *d_image_out;

|

||||

gpuErrchk(cudaMalloc((void **)&d_image_in, sizeof(unsigned char) * width * height));

|

||||

gpuErrchk(cudaMalloc((void **)&d_image_out, sizeof(unsigned char) * width * height));

|

||||

gpuErrchk(cudaMemset(d_image_out, 0, sizeof(unsigned char) * width * height));

|

||||

|

||||

clock_gettime(CLOCK_REALTIME, rt + 0);

|

||||

gpuErrchk(cudaMemcpy(d_image_in, image.ptr(), sizeof(unsigned char) * width * height, cudaMemcpyHostToDevice));

|

||||

dim3 dimBlock(BLOCK_SIZE, BLOCK_SIZE);

|

||||

dim3 dimGrid((width + BLOCK_SIZE - 1) / BLOCK_SIZE, (height + BLOCK_SIZE - 1) / BLOCK_SIZE);

|

||||

sobel_v1<<<dimGrid, dimBlock>>>(d_image_in, d_image_out, width, height);

|

||||

gpuErrchk(cudaPeekAtLastError());

|

||||

gpuErrchk(cudaMemcpy(out2.ptr(), d_image_out, sizeof(unsigned char) * width * height, cudaMemcpyDeviceToHost));

|

||||

clock_gettime(CLOCK_REALTIME, rt + 1);

|

||||

wt = (rt[1].tv_sec - rt[0].tv_sec) + 1.0e-9 * (rt[1].tv_nsec - rt[0].tv_nsec);

|

||||

printf("Sobel-v1 (GPU) : %9.6f sec\n", wt);

|

||||

|

||||

//Check results

|

||||

absdiff(out1, out2, result);

|

||||

int percentage = countNonZero(result);

|

||||

|

||||

//Reset Output image

|

||||

memset(out2.ptr(), 0, sizeof(unsigned char) * width * height);

|

||||

gpuErrchk(cudaMemset(d_image_out, 0, sizeof(unsigned char) * width * height));

|

||||

|

||||

clock_gettime(CLOCK_REALTIME, rt + 0);

|

||||

gpuErrchk(cudaMemcpy(d_image_in, image.ptr(), sizeof(unsigned char) * width * height, cudaMemcpyHostToDevice));

|

||||

// dim3 dimBlock(BLOCK_SIZE, BLOCK_SIZE);

|

||||

// dim3 dimGrid((width + BLOCK_SIZE - 1) / BLOCK_SIZE, (height + BLOCK_SIZE - 1) / BLOCK_SIZE);

|

||||

sobel_v2<<<dimGrid, dimBlock>>>(d_image_in, d_image_out, width, height);

|

||||

gpuErrchk(cudaPeekAtLastError());

|

||||

gpuErrchk(cudaMemcpy(out2.ptr(), d_image_out, sizeof(unsigned char) * width * height, cudaMemcpyDeviceToHost));

|

||||

clock_gettime(CLOCK_REALTIME, rt + 1);

|

||||

wt = (rt[1].tv_sec - rt[0].tv_sec) + 1.0e-9 * (rt[1].tv_nsec - rt[0].tv_nsec);

|

||||

printf("Sobel-v2 (GPU) : %9.6f sec\n", wt);

|

||||

|

||||

//Check results

|

||||

absdiff(out1, out2, result);

|

||||

percentage = countNonZero(result);

|

||||

if (percentage)

|

||||

{

|

||||

printf("Divergence %d\n", percentage);

|

||||

imshow("Output GPU", out2);

|

||||

imshow("error diff", result);

|

||||

waitKey(0);

|

||||

}

|

||||

assert(percentage == 0);

|

||||

|

||||

//Reset Output image

|

||||

memset(out2.ptr(), 0, sizeof(unsigned char) * width * height);

|

||||

gpuErrchk(cudaMemset(d_image_out, 0, sizeof(unsigned char) * width * height));

|

||||

|

||||

clock_gettime(CLOCK_REALTIME, rt + 0);

|

||||

gpuErrchk(cudaMemcpy(d_image_in, image.ptr(), sizeof(unsigned char) * width * height, cudaMemcpyHostToDevice));

|

||||

//TODO define dimGrid, dimBlock

|

||||

//TODO add sobel_v4 call

|

||||

dim3 dimBlock_v3(BLOCK_SIZE, BLOCK_SIZE);

|

||||

dim3 dimGrid_v3((width + (BLOCK_SIZE - 2) - 1) / (BLOCK_SIZE - 2), (height + (BLOCK_SIZE - 2) - 1) / (BLOCK_SIZE - 2));

|

||||

sobel_v3<<<dimGrid_v3, dimBlock_v3>>>(d_image_in, d_image_out, width, height);

|

||||

gpuErrchk(cudaPeekAtLastError());

|

||||

gpuErrchk(cudaMemcpy(out2.ptr(), d_image_out, sizeof(unsigned char) * width * height, cudaMemcpyDeviceToHost));

|

||||

clock_gettime(CLOCK_REALTIME, rt + 1);

|

||||

wt = (rt[1].tv_sec - rt[0].tv_sec) + 1.0e-9 * (rt[1].tv_nsec - rt[0].tv_nsec);

|

||||

printf("Sobel-v3 (GPU) : %9.6f sec\n", wt);

|

||||

|

||||

//Check results

|

||||

absdiff(out1, out2, result);

|

||||

percentage = countNonZero(result);

|

||||

if (percentage)

|

||||

{

|

||||

printf("Divergence %d\n", percentage);

|

||||

imshow("Output GPU", out2);

|

||||

imshow("error diff", result);

|

||||

waitKey(0);

|

||||

}

|

||||

assert(percentage == 0);

|

||||

|

||||

//Reset Output image

|

||||

memset(out2.ptr(), 0, sizeof(unsigned char) * width * height);

|

||||

gpuErrchk(cudaMemset(d_image_out, 0, sizeof(unsigned char) * width * height));

|

||||

|

||||

clock_gettime(CLOCK_REALTIME, rt + 0);

|

||||

gpuErrchk(cudaMemcpy(d_image_in, image.ptr(), sizeof(unsigned char) * width * height, cudaMemcpyHostToDevice));

|

||||

//TODO define dimGrid, dimBlock

|

||||

//TODO add sobel_v4 call

|

||||

sobel_v4<<<dimGrid, dimBlock>>>(d_image_in, d_image_out, width, height);

|

||||

gpuErrchk(cudaPeekAtLastError());

|

||||

gpuErrchk(cudaMemcpy(out2.ptr(), d_image_out, sizeof(unsigned char) * width * height, cudaMemcpyDeviceToHost));

|

||||

clock_gettime(CLOCK_REALTIME, rt + 1);

|

||||

wt = (rt[1].tv_sec - rt[0].tv_sec) + 1.0e-9 * (rt[1].tv_nsec - rt[0].tv_nsec);

|

||||

printf("Sobel-v4 (GPU) : %9.6f sec\n", wt);

|

||||

|

||||

//Check results

|

||||

absdiff(out1, out2, result);

|

||||

percentage = countNonZero(result);

|

||||

if (percentage)

|

||||

{

|

||||

printf("Divergence %d\n", percentage);

|

||||

imshow("Output GPU", out2);

|

||||

imshow("error diff", result);

|

||||

waitKey(0);

|

||||

}

|

||||

assert(percentage == 0);

|

||||

|

||||

gpuErrchk(cudaFree(d_image_out));

|

||||

gpuErrchk(cudaFree(d_image_in));

|

||||

|

||||

return iret;

|

||||

}

|

||||

Loading…

Add table

Reference in a new issue